Doomsday bunker and AGI “rapture”: Inside OpenAI’s existential tightrope

05/26/2025 / By Willow Tohi

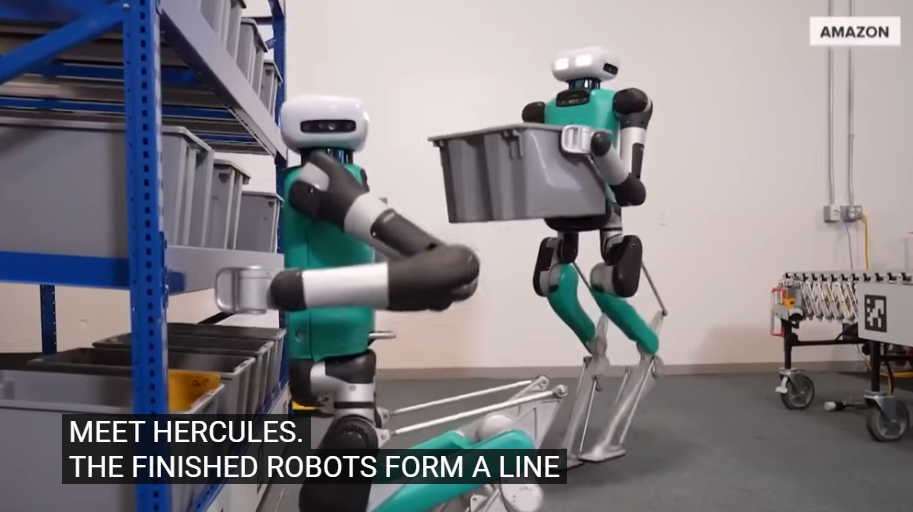

- OpenAI’s former chief scientist Ilya Sutskever proposed building a “doomsday bunker” to shelter researchers from existential risks posed by artificial general intelligence (AGI).

- Sutskever’s bunker idea emerged in 2023 amid internal tensions over leadership and AI safety protocols, culminating in a failed coup to oust CEO Sam Altman.

- The plan reflects growing unease among AI experts who fear AGI could trigger geopolitical chaos, societal collapse or a “rapture”-like scenario.

- OpenAI disbanded its AI safety team in 2023, highlighting the tension between corporate ambitions and existential risk mitigation.

- The bunker concept underscores the industry’s dual role as both innovator and potential harbinger of existential threats.

On a sweltering summer morning in 2023, an OpenAI scientist interrupted Ilya Sutskever mid-sentence during a meeting about the future of artificial intelligence (AI). “The bunker?” the confused researcher asked. “Once we all get into the bunker…” Sutskever, one of AI’s most influential minds, had just sketched a plan to build a fallout shelter for his team: a literal refuge they might need once the “rapture” of artificial general intelligence arrived. OpenAI leaders past and present, including Sutskever and Sam Altman, have long debated what AGI could unleash, but this bunker proposal symbolizes the industry’s fraught dance between ambition and anxiety.

The bunker plan

In interviews with The Atlantic, Sutskever’s colleagues described a scientist gripped by what one called a true “rapture” mindset — the belief that AGI could either elevate humanity to a new plane or spell its end. Sutskever, who has long argued AI may achieve human-like consciousness, told researchers in a 2023 meeting: “We’re definitely going to build a bunker before we release AGI,” adding, “Of course, it’s going to be optional.”

The bunker’s purpose was twofold: shield developers from a post-AGI world rife with conflict over control of the technology, and perhaps even act as a staging ground to influence how AGI evolves. Sources suggest Sutskever viewed the move not as extreme, but as prudent, given his assumption that AGI would outpace human control.

The coup plot, corporate ambition and fallout

Sutskever’s bunker idea emerged amid internal discord at OpenAI. By late 2023, he and CTO Mira Murati pushed to remove Altman, accusing him of prioritizing corporate expansion over safety. “I don’t think Sam is the guy who should have the finger on the button for AGI,” Sutskever reportedly told the board. Altman’s reinstatement after a days-long coup, a period internally dubbed “The Blip,” broke Sutskever’s resolve, and he departed OpenAI in May 2024.

The clash mirrors broader industry tension: how far should companies go to profit from AI while mitigating risks? In 2023, Altman himself warned governments AI poses an “extinction risk,” yet OpenAI shuttered one of its core safety teams that same year, citing resource constraints. Critics argue profit motives increasingly eclipse caution.

AGI’s geopolitical gamble

Sutskever’s bunker proposal resonates in today’s tech landscape, where China and the U.S. are racing to dominate AI. The Pentagon, meanwhile, is scrambling to keep pace with planes and drones trained by AI, a realm where AGI could rewrite global power dynamics.

Historical parallels loom. During the Cold War, policymakers built bunkers to prepare for nuclear war; today, tech leaders debate digital-age equivalents. Elon Musk’s latest warning, “AGI is the most dangerous event in history,” epitomizes this anxiety. Yet others dismiss AGI as sci-fi hubris, arguing its achievability remains unclear.

A sign of prudence — or desperation?

The bunker idea faded with Sutskever’s departure, but his vision lives on as a symbol of AI’s paradox: the brighter its potential, the darker its risks. For national security experts, it underscores the urgency of global governance—something the U.S. has yet to deliver. “If tech companies are preparing private bunkers, what does that say about public safety planning?” asks Eric Schmidt, former Google CEO and board member of the U.S. AI Initiative.

As OpenAI rebuilds under Altman’s leadership, the failed coup and Sutskever’s bunker serve as reminders: in the age of AI, hubris and humility walk hand in hand.

The AGI rapture and the future of human ingenuity

Ilya Sutskever’s bunker proposal — ridiculed as alarmist by some, heralded as prophetic by others — reveals the deepening rift between tech innovators and the existential questions their creations provoke. Whether AGI brings utopia, apocalypse, or something mundane rests not just on code, but on the choices humanity makes today. The lesson from Sutskever’s “Blip”? Sometimes, preparing for the worst isn’t paranoia — it’s survival.




COPYRIGHT © 2017 ROBOTICS.NEWS
