“Bomb the data centers”: Eric Schmidt sounds AI war warning amid U.S.-China race

05/28/2025 / By Willow Tohi

- Eric Schmidt warns AI arms race between U.S. and China could trigger global conflict over data centers and resource control.

- Advocates argue that frenetic AI development, akin to the nuclear arms race, endangers stability; Schmidt pushes for deterrents and transparency instead.

- Mutual Assured AI Malfunction (MAIM) is proposed as a framework to deter any single nation from seizing superintelligence dominance by ensuring that rivals can retaliate.

- U.S. aims to boost AI infrastructure amid fears that closed systems may lose to open-source Chinese competition.

- Calls for open-source collaboration, cybersecurity safeguards and global governance to avoid existential threats from militarized AI.

The world stands at an inflection point where the global race to master artificial intelligence could escalate from innovation competition to nuclear-scale conflict, according to alarming warnings by former Google CEO Eric Schmidt, Pentagon advisors and think tanks. In recent speeches, congressional testimonies and papers, Schmidt has likened today’s AI development sprint to the 1950s nuclear arms race — a metaphor gaining urgency as the U.S. and China claw for dominance in superintelligent systems.

“What begins as a push for a superweapon and global control risks prompting hostile countermeasures and escalating tensions, thereby undermining the very stability the strategy purports to secure,” Schmidt and co-authors wrote in a March 2025 paper. The stakes are existential: scenarios range from sabotage targeting data centers to preemptive strikes resembling Cold War nuclear brinkmanship.

The AI arms race: A new counterproliferation crisis?

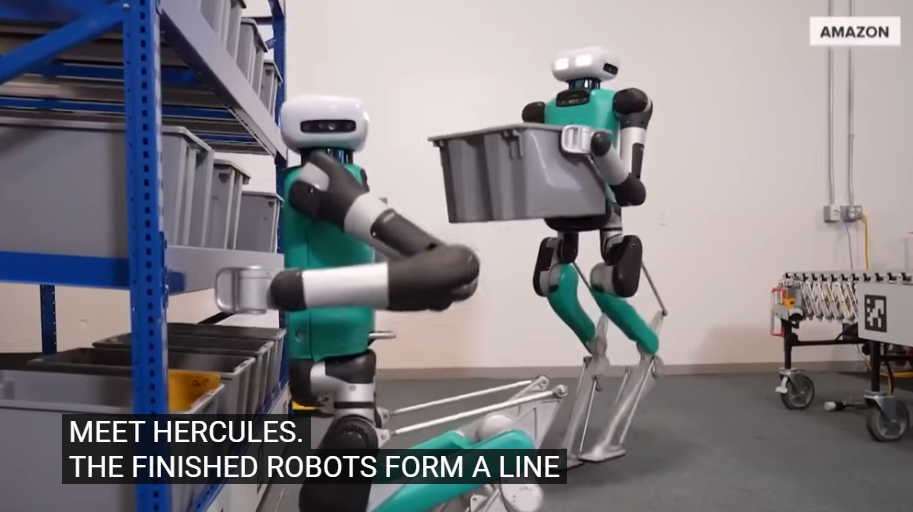

The AI frontier is no longer confined to tech labs. Schmidt, a former Pentagon adviser, used his 2025 TED Talk to lay out a chilling hypothetical: In a U.S.-China showdown over superintelligence control, one nation might sabotage adversaries’ AI models or even bomb data centers to “get one day ahead.” These warnings align with Capitol Hill hearings where lawmakers now treat AI as critical infrastructure.

China’s strides in open-source AI — such as DeepSeek’s efficiency breakthroughs — have given it an edge in global adoption, rattling U.S. policymakers. Beijing’s decentralized model, unlike Washington’s closed systems, empowers smaller nations to access cutting-edge tools. This dynamic has U.S. allies like Ukraine increasingly relying on Chinese platforms.

The National Security Commission on Artificial Intelligence, which Schmidt co-chaired, argues that AI’s “cosmic” potential — such as lethal autonomous weapons — could flip power structures. “You can’t control it because you can’t ban math,” warned the 2021 report. With AI “self-awareness” achievable via open-source tools for under $20 (as demonstrated by Berkeley Lab), the fear is not just state actors, but rogue groups or hackers weaponizing code.

Schmidt’s call for deterrence over domination

Schmidt’s team rejects calls for an AI “Manhattan Project,” arguing that competing headfirst risks global chaos. Their paper, Superintelligence Strategy, advocates instead for Mutual Assured AI Malfunction (MAIM): a cyber-centric deterrence model borrowing Cold War MAD principles. Under this model, nations would recognize that cross-border AI sabotage or nuclear-style preemptive strikes would backfire, spiraling into total disruption.

“MAIM is both an acknowledgment of vulnerability and a strategy to prevent unilateral action,” explained co-author Dan Hendrycks. “Just as nukes never ended a war, superintelligence should be kept in check through global guardrails.”

Yet skepticism persists. Critics like National Security Advisor Evelyn Green argue MAIM lacks teeth. “Without enforceable treaties, like the Nuclear Non-Proliferation regime, MAIM is just a theory,” she stated. Meanwhile, the Trump administration’s $500 billion “Stargate Project,” echoing Manhattan Project rhetoric, aims to reclaim advantage via hyper-scale computing and chip production in Midwestern “Heartland Hubs.” Analysts note this mirrors the 2022 CHIPS and Science Act, which sought semiconductor independence, underscoring how AI has merged with supply chain security.

Pandora’s box or preparation? The Stargate Project and beyond

The Stargate Project’s $500 billion investment links AI R&D, quantum computing and missile defense systems, with Trump framing it as a “race to save humanity.” However, Schmidt cautions that prioritizing speed over ethics could empower adversaries to reprogram U.S. systems.

“Centralized AI is a liability,” Schmidt’s ally Mike stated, citing OpenAI’s opaque model releases. “When Elon Musk tried to ‘decentralize’ OpenAI, it failed because private equity cycles keep it locked.”

The Biden-era emphasis on regulation—later rolled back by Trump—also drew criticism. Former FDA official Linda Chen noted, “Without oversight, an AI variant used to optimize farms could accidentally destabilize financial markets.”

A golden age or existential threat?

Schmidt’s warnings thrust AI from Silicon Valley into global security discussions, mirroring how nuclear science reshaped the 20th century. Yet the path forward is fraught. Open-source collaboration could democratize life-saving tech, but it could also enable destructive misuse. The U.S. faces a paradox: investing enough to stay ahead without inviting global desperation.

“At the turn of the millennium, people asked, ‘Why fear niche tech?’” Schmidt said. “Now, AI isn’t separate—it’s everywhere. The question isn’t if there’s an arms race, but who sets the rules.”

For now, Pentagon chiefs are hardening data hubs against cyber and physical attacks, while ethicists debate whether AI qua “natural intelligence” requires new governance. In this struggle between acceleration and restraint, the Fourth Turning may hinge on whether humans can wield a tool they’re rapidly losing control over.




COPYRIGHT © 2017 ROBOTICS.NEWS
